Last week…

In Part 1 of the Digital Twin Series we laid the foundations of what Digital Twins are and the tools and technologies behind the concept.

This week…

Being based in Nexus, in the centre of Leeds and next door to the University of Leeds, continues to give Slingshot a unique opportunity to access world-leading facilities and researchers. One such facility, within 100m of our office, is Virtuocity. Virtuocity is the University of Leeds’s world-leading Centre for City Simulation, which forms a strategic part of one of the UK’s leading and internationally acclaimed departments for transport research. The centre boasts some of the world’s most advanced virtual urban environment and vehicle simulators.

Our expert

Richard Romano is a Professor in the Institute for Transport Studies at the University of Leeds and Chair in Driving Simulation. He has worked in the area of Interactive Simulations and Virtual Reality (VR) for over 30 years and specialises in bringing humans into a virtual environment to evaluate prototypes of various physical and planned systems.

In this article he sits down with our Chief Technical Officer to discuss how Digital Twins give the end users of a product the freedom to have a greater say in its design, how simulation will always require trade-offs, and how the success of the Digital Twin relies on Virtual Reality not being 100% realistic.

To start, what do you mean by Digital Twins?

In order to make good evaluations of any prototype in virtual reality we need to compare it with a baseline. So we are always creating a Digital Twin of a baseline situation and then comparing that to a prototype. In the end, we do a lot of virtual prototyping and then make comparisons between prototypes and with a baseline.

When we create a Digital Twin, or when we create something in simulation, we’re very focused on what types of testing and experiments will be performed because we want to spend our efforts on what’s important. It’s driven by what our research questions are, what our experiments are, and then we will create as much of the Digital Twin as we require.

There are two parts to this. First, there is the environment in which we are going to test the product, and that environment could be created very realistically. I work in transport, so it could be a very realistic geo-specific road network, or it could be something we call geo-typical: the road network follows the rules of the road, but we have intentionally not made it a specific place. Because the individual roads still follow the rules of the road, in a way they are a Digital Twin at some level, but we assemble them so that we can do better testing. That means we put very specific segments of road together so that the participant can experience all these different situations quite quickly.

On the product side, it is a very interesting situation, because we could be taking on a product at any stage of development, including something that has already been fielded that the customer wants to improve. We might have a physical specimen that we can measure and test and then bring into the virtual world. We could instead have a prototype that is only artistic, or one that has a functional or requirements-based specification, which we import into the virtual world by working with our customer and making all kinds of assumptions to create something accurate enough for the research. Or we could have an engineering drawing with all the engineering data, which makes it easy to import into our virtual environment.

Could you give an example of the type of products and projects that you work with and describe the virtual environment that you are talking about?

Yes. Products for us can be all kinds of things. A product could be a new intersection, where we import the geometry of an intersection or roundabout that a designer has already made. Another product could be a head-up display that shows the distance to the vehicle in front, where the customer provides just a single requirement. In this case we leverage our human factors capability to design the product and incorporate it into the virtual vehicle, driving on geo-typical roads, so that we can test the product and make sure it works well.

Are there other benefits to your test users not recognising the road layouts they’re in? Does it give you different data?

From that perspective, the benefit is making sure that everybody has an equal experience when they drive in the simulator. We want everybody either not to know the road or to be an expert on that road.

David: So Leeds city centre is not a good idea?

Exactly. Because some people will know it like the back of their hand and other people will get lost as they try to drive around. That’s how I was for the first year and a half that I was here!

For those who aren’t familiar with Virtuocity, could you describe your different simulators?

Right now we have three different immersive simulators:

One is our driving simulator, the most advanced in the UK. It has a five metre by five metre track underneath the motion base that can re-create accelerations of up to 0.6g, and a full vehicle cab on top of the motion base provides the real physical environment. If we’re testing interior systems, we put the real physical systems inside and the participants experience the real car in a virtual environment. A lot of things nowadays are digital, so if a system is touchscreen-based then of course we can set up any system we want and test it. That’s our driving sim.

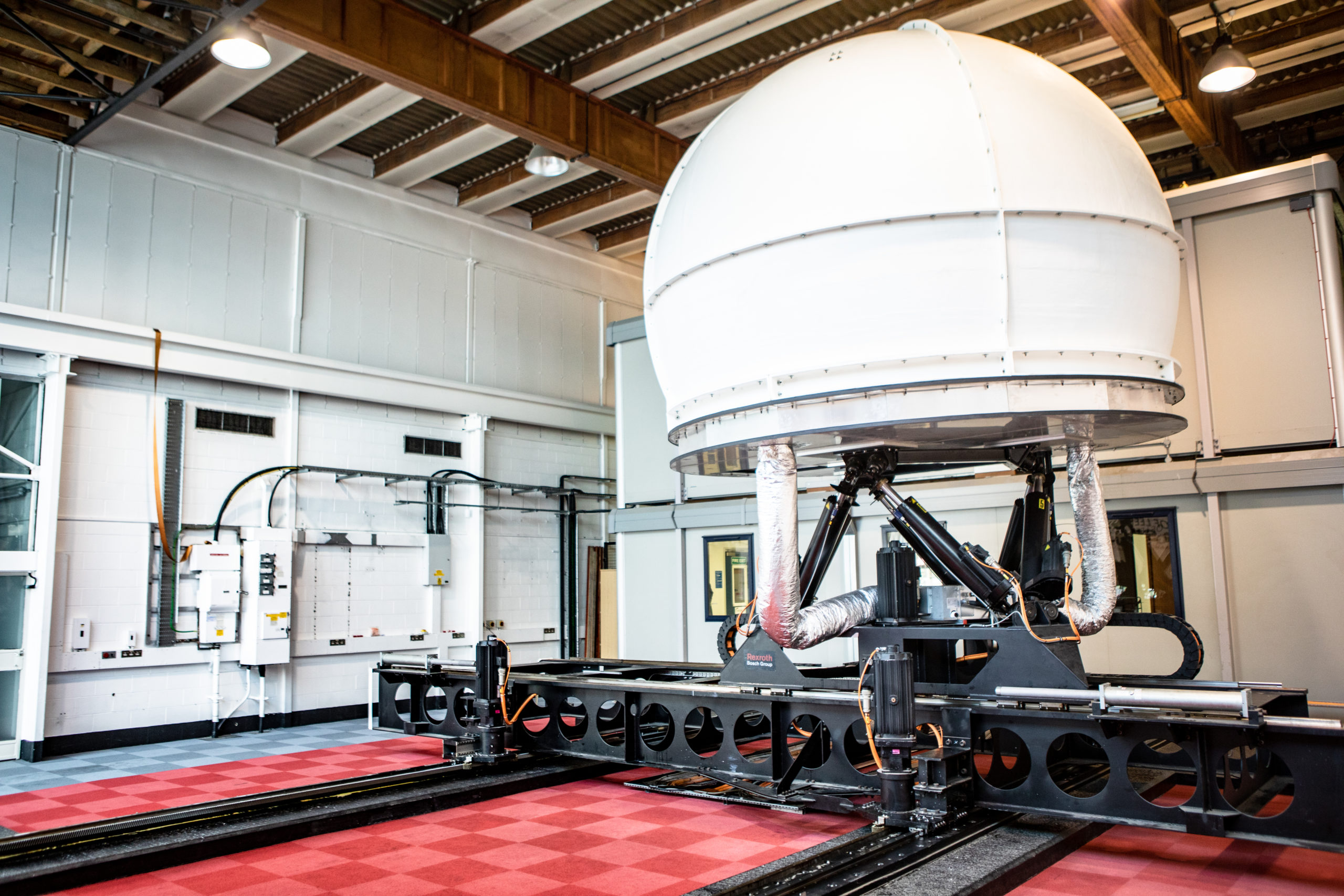

And then we have our HGV, or truck, simulator (pictured above), which is very similar to the driving simulator but with a truck or lorry interior. For any study in that simulator we always bring in lorry drivers, because we need experts. We want everybody coming from the same driver pool with similar levels of experience; bringing in somebody who has never driven a lorry doesn’t make any sense.

Our third is our pedestrian simulator, HIKER, which is by far the most advanced of its kind in the world. We can test a whole variety of things in it; one of them is pedestrians crossing roads and interacting with traffic. A nice thing about HIKER is that we can set up a mixture of virtual and augmented reality. For example, in the driving simulator the car has a fixed physical interior layout, whereas in HIKER it can be a completely virtual environment: we can have a significant portion of the car represented as a 3D model and only a few elements that are actually physical.

We’re always looking for the right mix of physical and virtual elements. We want the minimum number of physical elements needed to perform the research, and everything else is virtual, which makes it very easy to switch things out, change things around and create things that don’t exist. Our performance as humans interacting with physical elements is always going to be better than with virtual ones, so it ends up being a balance.

What does 0.6g mean?

When most people drive, they typically:

- Accelerate at 0.2g

- Decelerate at 0.4g

- Turn at about 0.3g

You only go higher than that if you’re racing. For example, if you’ve got your Tesla and you’re trying to do the quarter mile, you’re probably going to be accelerating at 0.4g, and if you’re braking hard in an emergency stop you might be at 0.8-0.9g.

So most situations are covered other than very hard decelerations where you wouldn’t get the full acceleration experience.
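For readers who prefer SI units, here is a minimal sketch that converts the g-force figures quoted above into m/s² (taking 1g as standard gravity, 9.80665 m/s²) and flags which of them fall within the driving simulator’s stated 0.6g limit. The manoeuvre labels are our own shorthand, and the emergency stop uses the top of the quoted 0.8-0.9g range; both are assumptions for illustration only.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2, standard acceleration due to gravity
SIM_LIMIT_G = 0.6  # peak acceleration the driving simulator can re-create

# Approximate figures quoted in the interview, in units of g.
# The labels are illustrative shorthand, not official categories.
manoeuvres = {
    "everyday acceleration": 0.2,
    "everyday turning": 0.3,
    "everyday deceleration": 0.4,
    "quarter-mile run": 0.4,
    "emergency stop (upper estimate)": 0.9,
}


def g_to_ms2(g: float) -> float:
    """Convert an acceleration expressed in g to metres per second squared."""
    return g * STANDARD_GRAVITY


def within_sim_limit(g: float, limit_g: float = SIM_LIMIT_G) -> bool:
    """True if the acceleration does not exceed the simulator's limit."""
    return g <= limit_g


if __name__ == "__main__":
    for name, g in manoeuvres.items():
        status = "covered" if within_sim_limit(g) else "beyond limit"
        print(f"{name}: {g}g = {g_to_ms2(g):.2f} m/s^2 ({status})")
```

Only the emergency stop exceeds 0.6g, which matches the point that very hard decelerations are the main case the motion base cannot fully reproduce.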

Is there a way to take your HIKER lab and your driving simulator and connect them together?

We’re doing those experiments now. As we get further into applying virtual technology to test products, we need a powerful virtual environment to test in. It’s relatively easy to create the physical elements, such as the buildings and roadways, and traffic made up of virtual vehicles controlled by the computer.

But while the computer-controlled vehicles give you a reasonable sense of immersion, and interact with you enough to help you set your speed, we don’t get good interactions between those computer-controlled vehicles and our human-driven car. If you think about how difficult it is for an automated vehicle (AV) to drive around in the real world, that’s the same type of challenge we have for every single one of the virtual agents in our driving sim. We want to create those interactions as realistically as possible, so instead of having a computer control the other vehicle while we drive ours, we’re connecting multiple humans together and letting them interact. Then any merging or crossing situation, where you really get down to brass tacks, involves two humans interacting.

Isn’t the particular challenge of connecting these simulators together a brand new concept?

It’s all brand new, so it’s all a challenge. The programming needed to connect the vehicles together, though, is something that’s been done for a long time. The military developed this technology in the 1980s because they wanted training exercises in which multiple people in their different vehicles interact and work together as a team.

So the networking is relatively easy; some people still spend a lot of time working on it, but it’s more or less a solved problem. The harder part is setting up convincing scenarios for research purposes. The military just tell everybody what’s going on and that they’re supposed to stay together as a team, and if they don’t interact with each other appropriately, they’re failing: for example, if one jeep is supposed to drive 20 feet behind another and it doesn’t, it’s failing. In research we don’t want to tell the drivers what they’re supposed to be doing in an experiment.

David: You want to observe how they’re behaving naturally in that environment.

Exactly, that’s a perfect way to put it. We’re used to doing very controlled experiments with a single driver, which you can contrast with naturalistic studies where you go out into the real world and just watch people drive around. We’re now working in the domain between those two, where we want some level of naturalistic interaction to develop but still need some level of control so that we can compare different designs with each other.

What are the biggest limitations or hindrances for your test subjects?

It’s a stack-up of many things. We can do any one thing well; making everything work well at once is very difficult.

We know how to simulate a braking manoeuvre very well, but when that braking manoeuvre is laid out so that you’re interacting with, say, a human-controlled crossing car, that’s when it becomes difficult. I always say that simulators are part-task: you need to decide what you want to use one for and then design the simulator to do that task as well as possible.

Now, as we try to integrate vehicle simulators, there are so many more challenges. Yes, the networking is well defined and pretty well implemented, but if anybody in the experiment goes offline you have a problem. With 100 computers instead of 1, the chance that one of those 100 computers is going to shut down is high, and when it does it’s a big deal.
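The point about 100 computers is a basic probability argument. As a rough sketch, assume each machine fails independently during a session with some probability p; the chance that at least one of n machines fails is then 1 - (1 - p)^n. The 1% per-machine figure used below is purely hypothetical, since the interview gives no failure rates.

```python
def p_any_failure(p: float, n: int) -> float:
    """Probability that at least one of n machines fails during a session,
    assuming each fails independently with probability p (a simplification)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    # Complement of "every machine survives"
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    p = 0.01  # hypothetical 1% chance that any one machine fails per session
    for n in (1, 10, 100):
        print(f"{n:>3} computers: {p_any_failure(p, n):.1%}")
```

With p = 0.01 this prints 1.0%, 9.6% and 63.4% for 1, 10 and 100 computers: a per-machine reliability that is rarely a problem for one machine makes a failure more likely than not across a hundred. Independence is itself an assumption; shared power or network faults would make failures correlated.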

Also, if we care enough about an interaction between two people to put two humans in the simulator and have them interact, that puts a lot more pressure on getting each vehicle’s reactions right from a visualisation perspective, because they are part of the closed-loop interaction. It’s even more difficult to have a pedestrian interacting with a vehicle, because making that pedestrian look realistic enough to get the experimental results we need is extremely difficult.

We have all these research questions, but what it really comes down to is how good a simulator you need to perform the test. There are headlines that say, “We just need to make it as good as we can,” but that’s a waste of effort. People get in our simulators and say, “Well, this doesn’t look as good as a game,” and that’s intentional. In a game there are really cool graphics and you go, “Oh, look at that! That’s really cool!” That’s the last thing we want to happen in our simulator. We want the simulator to cause drivers to act the same way they would in the real world, which means not gawking at the technology.

If you were able to deliver photorealism along with all the other aspects, do you think that would help, or would it distract the user?

It’s one of those things you have to be very careful about. There’s this thing called the uncanny valley: as you make things more and more realistic, close to the point of perfect realism, they actually become scary for people. You could look at a person in VR and say, “Well, that’s really neat, but they look like a zombie,” or sense that there’s something going on there that’s not normal.

Is that what you would call Simulator Sickness?

Simulator sickness is a similar type of thing. You are in a simulator and it feels realistic; you’re in there for 20 minutes, you forget that you’re in a simulator, you start feeling like you’re in the real vehicle and you carry on. All it takes is one miscue that trips you up and reminds you that you’re in a simulator, and that can make you sick.

As you get the realism closer and closer to 100%, the little things, the nuances, trip you up, whereas if you are in a cartoon world you get over the fact that it’s a cartoon world. In that world we can still do a lot of cool testing, and it’s more forgiving.

It’s the same with photorealism and all the different graphics in games, for example. I’ve been working on very high-fidelity photorealism since about 2003: the techniques were invented a long time ago, but we were finally able to run them in real time around 2008, and they are the same techniques used in games today. We still can’t use many of the cool techniques, such as real-time shadowing, in a driving sim, even though they are so exciting and so great to look at. That shadowing produces what we call artefacts, and even if there are no artefacts 95% of the time, in the 5% of the time when there are, people will look at them, which distracts them from driving. So we have to turn that style of shadowing off.


We have to make trade-offs to make sure that the outcomes of certain experiments are what we need them to be. Again, the trade-offs are always there: if the research question is about shadows, then we have to have shadows and get them right by controlling the situation, the scenario, so that none of those 5% cases come up in that experiment. There always have to be trade-offs in simulation.

Thinking about the next 5 to 10 years, if there were one big challenge you could solve, which innovation would you choose as your favourite?

My favourite will probably be an output of the development of automated vehicles. We’re going to learn a lot about how humans behave, our models of humans in the simulator are going to improve a lot, and we’ll be able to create better virtual environments using these models.

People ask what the pay-off is going to be after spending all this money on automated vehicles. All this research on AVs is helping to improve AI that can track humans, predict human intentions and understand humans, and all of that can also apply to medical work and to building robots that can help you around the house. So our knowledge of how humans behave and how AI should interact with humans is increasing all the time, and I feel that we’re going to get a large pay-off from that because of the development of automated vehicles.

Other favourites: I think graphics cards are getting to the point where we’ll be able to render far more complex ray-traced scenes, and we’ll have much better graphics. Projectors and display technologies are getting cheaper, and once we get to eye-limiting resolution, someone with 6/6 or 20/20 vision will be able to see everything the same as they would in the real world.
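As a back-of-the-envelope illustration of what eye-limiting resolution implies, assume the common rule of thumb that 20/20 (6/6) vision resolves detail down to about one arcminute, or roughly 60 pixels per degree of view. The fields of view below are hypothetical examples, not the specifications of any Virtuocity display.

```python
import math

# Rule-of-thumb assumption: 20/20 (6/6) vision resolves about 1 arcminute
ACUITY_ARCMIN_20_20 = 1.0
ARCMIN_PER_DEGREE = 60


def pixels_for_eye_limiting(fov_degrees: float,
                            acuity_arcmin: float = ACUITY_ARCMIN_20_20) -> int:
    """Minimum pixels across `fov_degrees` so that no pixel subtends more
    than `acuity_arcmin` arcminutes at the viewer's eye."""
    if fov_degrees <= 0 or acuity_arcmin <= 0:
        raise ValueError("field of view and acuity must be positive")
    return math.ceil(fov_degrees * ARCMIN_PER_DEGREE / acuity_arcmin)


if __name__ == "__main__":
    # Hypothetical fields of view, not the specifications of a real simulator
    for fov in (90, 180, 360):
        print(f"{fov} degrees: {pixels_for_eye_limiting(fov)} pixels across")
```

This prints 5400, 10800 and 21600 pixels across for 90, 180 and 360 degrees. It assumes pixels are spread evenly in angle across the view; real projection geometry, and the fact that acuity varies between people, would shift these numbers.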

I think the last thing we’re going to see, some time in the future, is direct stimulation of the vestibular system, to the point where we won’t need a motion base to feel like we’re moving around in virtual reality. That of course is really cool. It’s probably going to require some kind of really simple implant at some point, but it’s going to be worth it. In the near term, we’ll probably stimulate certain aspects of the feeling of motion externally. Those aspects currently require us to make a simulator as big as 5 metres, but we’ll do that part with electrical signals applied to your head, to your vestibular system, and then the motion base in the simulator can be much smaller, only about a third of a metre. The motion base will move, you’ll be able to have a headset on, and the experience is going to be really incredible.

How would you answer someone completely unfamiliar with the concept of Digital Twins if they asked you what a Digital Twin was?

A Digital Twin is a digital representation of a physical or planned system. In my case, we use it to bring in members of the public to experience those systems and give their opinions and feedback on the design, so that we can design them better. In addition to their verbal feedback, we can understand how they will perform with these systems and make sure the systems are going to be safer, higher performing and more enjoyable. So as we build new products and new cities, we want them to be better than the previous ones.

And if that person then asked you what it means for them personally?

What it means for them as an individual is we’re going to be able to create a more economically viable, more enjoyable product and they’re going to have more of a voice in its design.

Is there anything else about Digital Twins that particularly interests you?

The idea of the scalability of Digital Twins. Our goal is to get the end user involved in order to do human-centric design, so we have to get as many end users involved as possible. However, to place them in the virtual world in the appropriate context, we have to create models of an entire city or an entire country to understand the demand and what it’s going to feel like at individual street level. We need a lot of other modelling, and a lot of other aspects of the Digital Twin at all these different levels, to relate the streetscape properly to a high-level policy decision.

Within that context, how would you test a situation where you want to have maybe 100 people in your virtual environment? Because you can’t put 100 people in your HIKER lab…

As I said previously, every simulator is a part-task, single-task simulator, so the HIKER lab is not designed for something like that. Soon we will be working with a company that uses a large warehouse where a lot of people, all wearing headsets, can experience a virtual place and interact with each other.

But one of the big questions is: why are all these people there? Where are they going? What’s their motivation? Those are the things we need before we design and run a test. If you’re talking about a city square and you want to do an experiment, my first question would be: how many people are in the square? That leads to: why are they there? Where are they going? To work? What’s the demand? What are the travel patterns? If the aim of the experiment is, say, doubling the number of trains coming into Leeds station… well, when are they arriving?

There are so many other aspects of a Digital Twin that we rely on others to analyse and model, and we can then connect with those models and bring the human into them.

And finally, assuming the SMART City Expo is still going ahead this year, what is the coolest thing you anticipate seeing there?

I really enjoyed the mixed reality concepts that some people showed last year, and I would like to see those improved. I’d like to see something that helps ground the data being shown.

At an Expo it’s not so much about getting individuals into a virtual environment and having them experience it. I think it’s similar to when you have a set of city councillors and you’re going to bring them in to make decisions on a transport design: you want to be able to rapidly show them all the different configurations and get that information into the councillors’ heads so they can make the right decision, and that may or may not include immersive VR.

There’s just so much information and so many different ways to visualise it, and that’s what I’d like to see at the next SMART Cities, because the data behind the system, behind the scenes, is very complex. We’re collecting more and more data and we have better and better models, but how do we make it so that we can understand the benefits? Humans are good at looking at visual data, seeing differences and understanding things, and that’s what we need to make better use of with large data sets. I would like to see better examples of that at SMART Cities.

More in the Series

- What are Digital Twins today?

- Humancentric Digital Twins

- Personal Digital Twins

- Agent-based Modelling for Digital Twins

- Digital Twins and Decision-Making: Leeds City Council

- Digital Twins and Decision-Making: Urban Regeneration

- The Role of Simulation in Decision-Making

- Investing in Digital Twins

- End of Open Plan Offices? Smart Solutions for a Safer Office

- Data Insights, Digital Twins and Dashboards

- Digital Twins for Beginners

- Series Summary